Basketball Shot Trainer App

This is a project that employs computer vision, speech-to-text, time-series analysis, visualization, and LLM prompt engineering to provide feedback to a basketball player as they practice their shot.

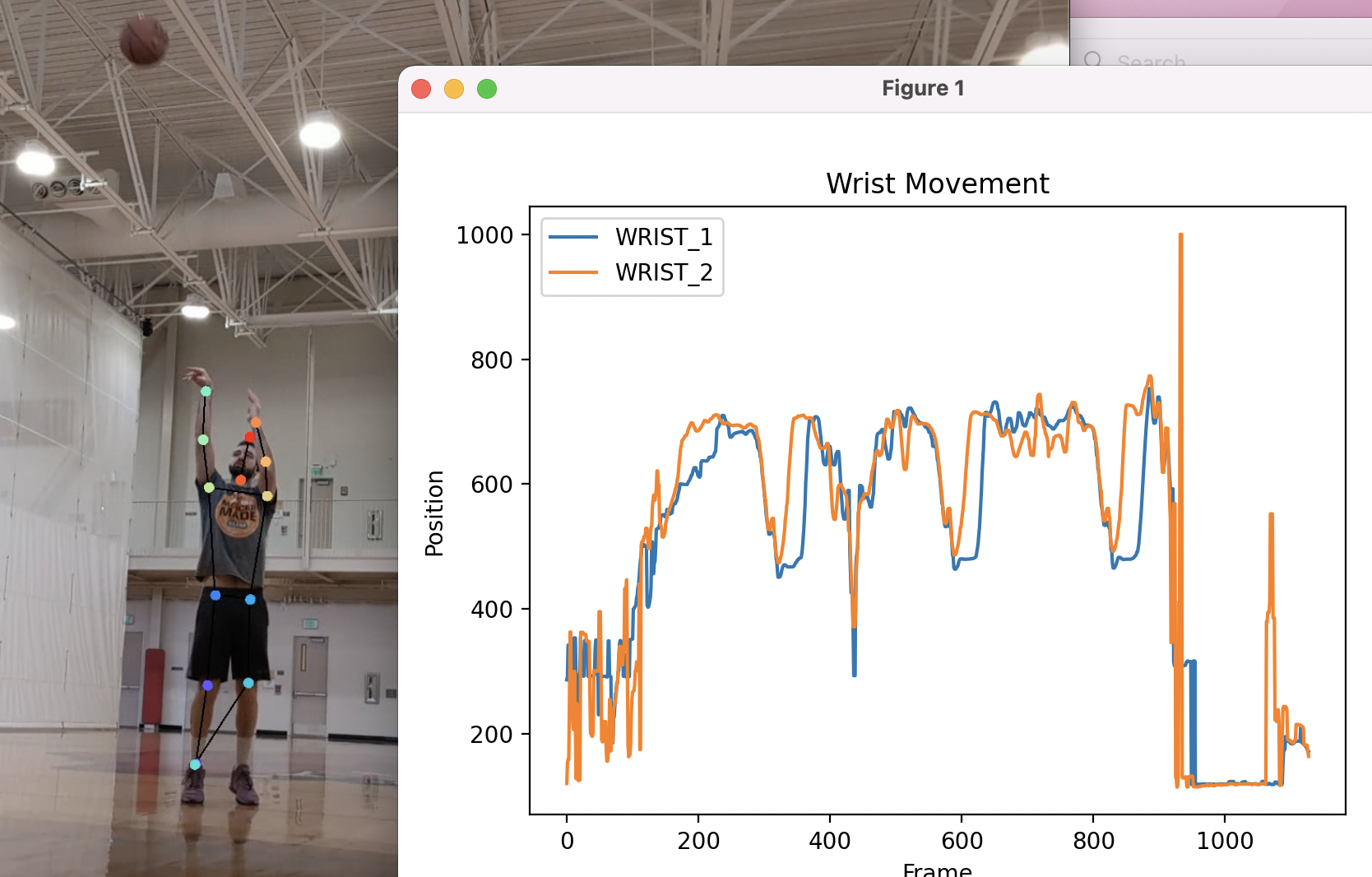

Visualization of DeepLabCut's output during a free throw attempt.

Motivation

I have long been interested in how computer vision can mesh with biomechanics for various purposes, from creating interactive art displays to improving sport performance, rehabilitation, wildlife tracking, and more. I made this project to get experience working with frameworks relevant to this line of work.

The Data

All good AI projects start with data. I could have collected many more data sources, but my aim was to build something that other people could use on themselves with minimal budget and setup.

In the end, I wanted to collect biomechanical data for each shot, along with a label recording where the ball ended up: “make” or “miss”. For misses, I also wanted to record the direction in which the ball missed.

So I devised a plan to capture this. I went to the gym and put on some headphones. I set up my phone camera to record myself shooting free throws and set the video to capture audio from my headphones instead of the phone's built-in microphone. Then I got in front of the camera and started shooting free throws. After each shot, I said aloud one of “make”, “left”, “right”, “short”, or “long”.

Extracting Pose Information from Video

My first data-wrangling goal was to extract a useful representation of biomechanical “pose” data from basketball shooting sessions. I researched many APIs for this task and ultimately chose DeepLabCut, because its API also works with Hugging Face models for non-human animal research, which I am interested in pursuing in the future. After a brief wrestling match with installation and pathing issues, I got my small laptop to run the software on a proof-of-concept 30-second video of myself shooting exactly three free throws. For longer videos, I turned to Google Colab for compute resources.
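DeepLabCut can save its predictions as CSV (as well as HDF5) files. The sketch below assumes the single-animal CSV layout with three header rows (scorer, bodyparts, coords) and shows one way to load it into pandas. The body-part names and the tiny inline sample are hypothetical stand-ins, not data from this project.

```python
import io

import pandas as pd


def load_dlc_csv(path_or_buffer):
    """Load a single-animal DeepLabCut CSV (three header rows: scorer,
    bodyparts, coords) and drop the scorer level, leaving (bodypart, coord)."""
    df = pd.read_csv(path_or_buffer, header=[0, 1, 2], index_col=0)
    df.columns = df.columns.droplevel(0)
    return df


# Tiny synthetic file in the same layout; the body-part names are hypothetical.
sample = """scorer,model,model,model,model,model,model
bodyparts,left_wrist,left_wrist,left_wrist,forehead,forehead,forehead
coords,x,y,likelihood,x,y,likelihood
0,310.0,420.5,0.98,300.0,250.0,0.99
1,311.2,415.0,0.97,300.5,250.4,0.99
"""
pose = load_dlc_csv(io.StringIO(sample))
wrist_y = pose[("left_wrist", "y")]  # one column per (bodypart, coord) pair
```

Each keypoint then comes with its own `likelihood` column, which is what a confidence-weighted filter needs.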

Interpreting Pose Information

I started with the three-shot video because it made it easy to create and test heuristics against a known set of shots. I loaded the data into a pandas DataFrame, visualized the wrist trajectories, and compared them to the moments when I actually shot the ball. It was clear which movements indicated shots, but the data was noisy, so I experimented with filtering before defining shot-detection heuristics. I ended up writing a custom filter: a rolling average weighted by DeepLabCut’s confidence score for each joint location.
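A confidence-weighted rolling average can be sketched in a few lines of pandas. This is a minimal illustration, not the project's actual filter: the centered window and the function name are assumptions. Each frame's coordinate is weighted by DeepLabCut's likelihood score, so a low-confidence glitch barely moves the smoothed value.

```python
import numpy as np
import pandas as pd


def confidence_weighted_smooth(coord: pd.Series, likelihood: pd.Series, window: int = 5) -> pd.Series:
    """Centered rolling mean of one keypoint coordinate, where each frame is
    weighted by the detector's confidence for that frame (hypothetical helper)."""
    weighted_sum = (coord * likelihood).rolling(window, center=True, min_periods=1).sum()
    weight_total = likelihood.rolling(window, center=True, min_periods=1).sum()
    # Avoid dividing by zero when every frame in the window has zero confidence.
    return weighted_sum / weight_total.replace(0, np.nan)


# Synthetic wrist y-coordinates with one low-confidence glitch at frame 2.
y = pd.Series([100.0, 101.0, 300.0, 103.0, 104.0])
conf = pd.Series([0.9, 0.9, 0.05, 0.9, 0.9])
smoothed = confidence_weighted_smooth(y, conf, window=3)
```

An unweighted 3-frame mean would put the glitch frame at 168; the weighted version keeps it at roughly 107, close to its neighbors.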

Side-by-side visualization of the wrist movement over three full shot attempts, with body keypoints overlaid.

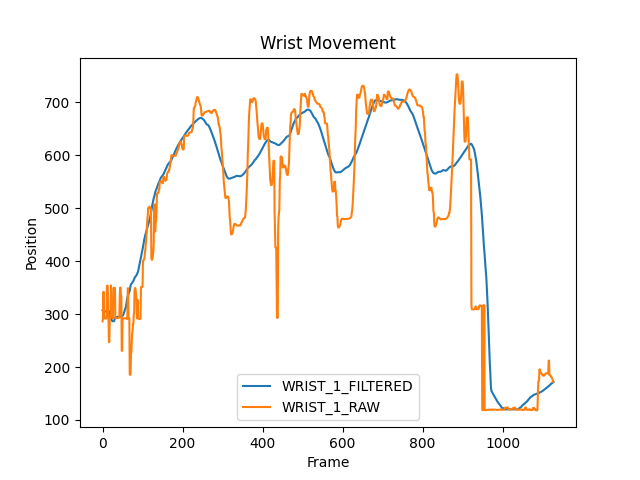

Comparison of raw and smoothed wrist position data to highlight the benefit of applying filtering techniques.

I eventually settled on a simple heuristic that detects when both hands are above the forehead. Note that the y-axis is inverted: DeepLabCut's image coordinates increase downward, so a lower position on the graph corresponds to a higher position in the video frame. Simple heuristics are great when they have real-world meaning, and this one does.
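A minimal sketch of the “both hands above the forehead” heuristic, assuming per-frame (already smoothed) y-coordinates for each wrist and the forehead. Because image y grows downward, “above” means a smaller y value. The `min_frames` threshold for discarding one-frame blips is an assumption, not a detail from the original code.

```python
import numpy as np


def detect_shot_segments(left_wrist_y, right_wrist_y, forehead_y, min_frames=3):
    """Return (start, end) frame ranges, end exclusive, in which both wrists
    are above the forehead (smaller image y) for at least `min_frames` frames."""
    lw = np.asarray(left_wrist_y, dtype=float)
    rw = np.asarray(right_wrist_y, dtype=float)
    fh = np.asarray(forehead_y, dtype=float)
    above = (lw < fh) & (rw < fh)

    # Pad with False so every run has a rising (+1) and a falling (-1) edge.
    edges = np.diff(np.concatenate(([False], above, [False])).astype(int))
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)
    return [(int(s), int(e)) for s, e in zip(starts, ends) if e - s >= min_frames]


forehead = [200.0] * 12
left = [300, 300, 150, 140, 150, 300, 190, 300, 150, 150, 150, 300]
right = [300, 300, 160, 145, 155, 300, 190, 300, 150, 150, 150, 300]
segments = detect_shot_segments(left, right, forehead)  # the 1-frame blip at frame 6 is dropped
```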

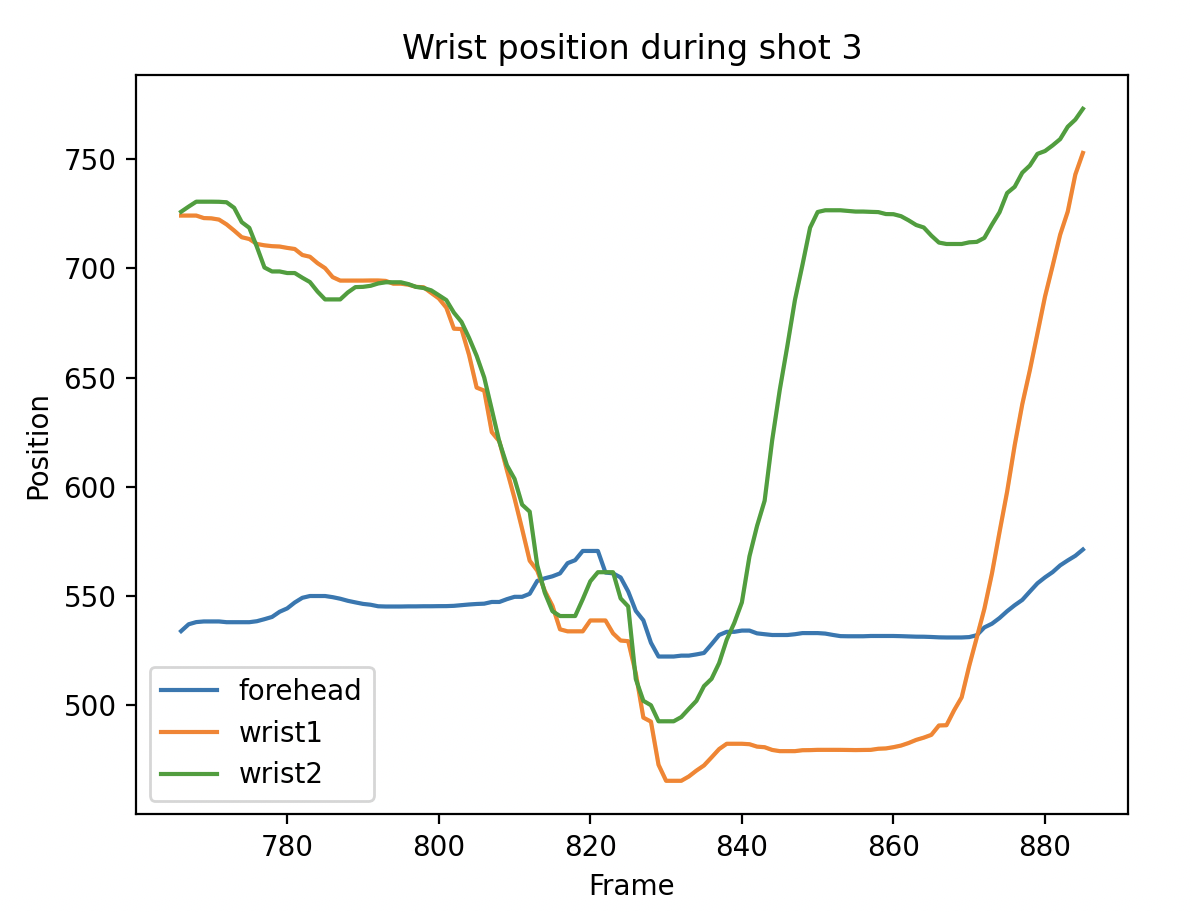

Trajectory of both wrists and the forehead for a single shot, highlighting coordinated movement during release.

For each detected shot, my code finds the video frame where the average height of the two wrists peaks and marks it as the shot’s “center”. I then captured two seconds before and two seconds after that frame to include both the free throw approach and the follow-through. In addition to the motion data, I used FFmpeg to export the 4-second video clip associated with each shot.
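The centering and clipping step might look like the sketch below. It assumes a 30 fps video (consistent with the 120-timestep shots described later, but not stated in the text), and both helper names are hypothetical. In the FFmpeg command, `-ss` placed before `-i` seeks in the input and `-t` sets the clip duration.

```python
import numpy as np


def shot_window(left_wrist_y, right_wrist_y, segment, fps=30.0, seconds=2.0):
    """Pick the frame inside `segment` where the averaged wrists are highest
    (smallest image y), then return (center, first, last) for a +/- `seconds` window."""
    start, end = segment
    avg_y = (np.asarray(left_wrist_y, dtype=float) + np.asarray(right_wrist_y, dtype=float)) / 2
    center = start + int(np.argmin(avg_y[start:end]))
    half = int(round(seconds * fps))
    return center, max(0, center - half), min(len(avg_y), center + half)


def ffmpeg_clip_cmd(video_path, out_path, center_frame, fps=30.0, seconds=2.0):
    """Build an FFmpeg command that cuts a (2 * seconds)-long clip around the center frame."""
    start_time = max(0.0, center_frame / fps - seconds)
    return [
        "ffmpeg", "-y",                # overwrite the output file if it exists
        "-ss", f"{start_time:.3f}",    # seek position in the input, in seconds
        "-i", video_path,
        "-t", f"{2 * seconds:.3f}",    # clip duration
        out_path,
    ]


left = np.full(300, 250.0)
left[150] = 100.0  # the wrists peak (smallest y) at frame 150
right = left.copy()
center, first, last = shot_window(left, right, segment=(140, 160))
cmd = ffmpeg_clip_cmd("session.mp4", "shot_001.mp4", center)
```

Running `subprocess.run(cmd, check=True)` would then write the clip. Because no codec is specified, FFmpeg re-encodes, which keeps the cut frame-accurate at the cost of speed.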

Determining Labels from Audio Data with PyAudio and Vosk

To label the shots, I used PyAudio and Vosk. PyAudio let me detect loud sounds (my voice calling out the result of the previous shot), and Vosk matched them to a limited vocabulary (“make”, “left”, “right”, “short”, “long”). After detecting and labeling shots from the audio alone, I aligned them with the shots detected from the biomechanics using a combination of index scaling and lookback heuristics. When I tested these heuristics on a larger set of 213 shots, 209 were successfully mapped (98% recall) with no false-positive matches.
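The lookback part of the alignment can be sketched as follows: each recognized callout is assigned to the most recent shot whose center came before it, as long as that shot is no more than `max_lookback` seconds earlier. This sketch assumes the audio and video share one timeline (they come from the same recording) and omits the index-scaling step; the 6-second cutoff and the function name are hypothetical.

```python
from bisect import bisect_left


def match_callouts_to_shots(shot_times, callouts, max_lookback=6.0):
    """Assign each spoken label to the latest shot that happened before it.

    shot_times: sorted shot-center times in seconds (from the pose data).
    callouts: (time_in_seconds, word) pairs from speech recognition.
    Returns {shot_index: word}. A shot is labeled at most once, and callouts
    with no shot in the preceding `max_lookback` seconds are dropped.
    """
    matches = {}
    for t, word in sorted(callouts):
        i = bisect_left(shot_times, t) - 1  # last shot strictly before the callout
        if i < 0 or t - shot_times[i] > max_lookback:
            continue  # no plausible shot to attach this callout to
        if i in matches:
            continue  # shot already labeled; ignore the repeated callout
        matches[i] = word
    return matches


shot_times = [5.0, 15.0, 25.0, 40.0]
callouts = [(7.5, "make"), (17.0, "left"), (18.0, "left"), (33.0, "short"), (42.0, "long")]
matched = match_callouts_to_shots(shot_times, callouts)
recall = len(matched) / len(shot_times)  # shot 2 has no callout within the lookback window
```

Skipping ambiguous callouts instead of forcing a match trades some recall for fewer false-positive labels.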

Neural Networks for Outlier Inspection

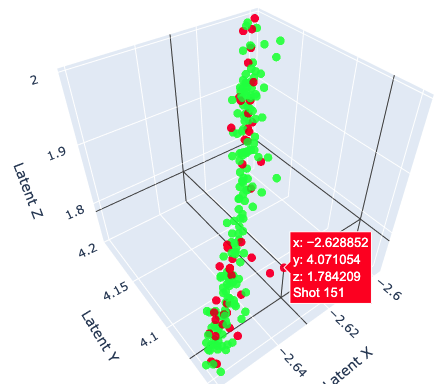

After combining the shot labels with the segmented biomechanical data from DeepLabCut, I wanted to use neural networks to analyze my shot consistency and see whether I had any bad habits associated with missed shots. So I compressed each shot (120 timesteps of 14 joints, each a 3-D vector) into a single 3-D point using an autoencoder in PyTorch. I then plotted these points in 3-D space, keeping each shot’s ID and label in the graph so I could quickly inspect the corresponding exported video clips.
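A minimal PyTorch autoencoder with a 3-D bottleneck, assuming each shot has already been normalized into a tensor of shape (120, 14, 3). The fully connected layers, hidden size, and training settings are illustrative choices, not the architecture used in this project, and random tensors stand in for real shots.

```python
import torch
from torch import nn

T, J, C = 120, 14, 3  # timesteps, joints, values per joint (shape from the text)


class ShotAutoencoder(nn.Module):
    """Fully connected autoencoder that squeezes a whole shot into `latent_dim` numbers."""

    def __init__(self, latent_dim: int = 3, hidden: int = 256):
        super().__init__()
        flat = T * J * C
        self.encoder = nn.Sequential(
            nn.Flatten(),  # (batch, T, J, C) -> (batch, T*J*C)
            nn.Linear(flat, hidden),
            nn.ReLU(),
            nn.Linear(hidden, latent_dim),
        )
        self.decoder = nn.Sequential(
            nn.Linear(latent_dim, hidden),
            nn.ReLU(),
            nn.Linear(hidden, flat),
            nn.Unflatten(1, (T, J, C)),  # back to (batch, T, J, C)
        )

    def forward(self, x: torch.Tensor):
        z = self.encoder(x)
        return self.decoder(z), z


def train(model: nn.Module, shots: torch.Tensor, epochs: int = 200, lr: float = 1e-3) -> float:
    """Full-batch training on reconstruction (MSE) loss; returns the last loss value."""
    opt = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = nn.MSELoss()
    for _ in range(epochs):
        opt.zero_grad()
        recon, _ = model(shots)
        loss = loss_fn(recon, shots)
        loss.backward()
        opt.step()
    return loss.item()


torch.manual_seed(0)
shots = torch.randn(32, T, J, C)  # random stand-in for 32 normalized shots
model = ShotAutoencoder()
final_loss = train(model, shots, epochs=50)
with torch.no_grad():
    _, latent = model(shots)  # one 3-D point per shot, ready for a 3-D scatter plot
```

A fully connected encoder is the simplest option; a convolutional or recurrent encoder over the time axis would be a natural alternative for sequences like these.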

This graph was made after several iterations of data cleaning, such as trimming off the shot’s “follow-through” (so the model would not focus on how my body language “reacts” to the result of the shot) and using Dynamic Time Warping to align the shots temporally as closely as possible.
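Dynamic Time Warping finds the monotonic frame-to-frame matching between two sequences that minimizes the summed distance. Below is a plain NumPy sketch of the classic O(nm) dynamic program, plus a hypothetical helper that resamples a shot onto a reference shot's timeline by averaging the shot frames matched to each reference frame. The Euclidean frame distance and the averaging rule are assumptions, not details from the original pipeline.

```python
import numpy as np


def dtw(a, b):
    """Dynamic Time Warping between two sequences of frames.

    a, b: arrays of shape (frames, ...); extra dimensions (e.g. joints x coords)
    are flattened into one feature vector per frame.
    Returns (total_cost, path), where path lists matched (i, j) frame pairs.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    a = a.reshape(a.shape[0], -1)
    b = b.reshape(b.shape[0], -1)
    n, m = len(a), len(b)
    # Euclidean distance between every frame of a and every frame of b.
    cost = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    # acc[i, j] = cheapest alignment of the first i frames of a with the first j of b.
    acc = np.full((n + 1, m + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            acc[i, j] = cost[i - 1, j - 1] + min(acc[i - 1, j - 1], acc[i - 1, j], acc[i, j - 1])
    # Backtrack from the end to recover which frames were matched.
    path, i, j = [], n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        step = int(np.argmin([acc[i - 1, j - 1], acc[i - 1, j], acc[i, j - 1]]))
        if step == 0:
            i, j = i - 1, j - 1
        elif step == 1:
            i -= 1
        else:
            j -= 1
    return float(acc[n, m]), path[::-1]


def warp_to_reference(shot, reference):
    """Resample `shot` onto the reference timeline (hypothetical helper):
    each reference frame gets the mean of the shot frames matched to it."""
    shot = np.asarray(shot, dtype=float)
    _, path = dtw(shot, reference)
    m = len(reference)
    out = np.zeros((m,) + shot.shape[1:])
    counts = np.zeros(m)
    for i, j in path:
        out[j] += shot[i]
        counts[j] += 1
    return out / counts.reshape((-1,) + (1,) * (out.ndim - 1))


reference = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
delayed = np.array([0.0, 0.0, 1.0, 2.0, 3.0, 4.0])  # same motion, one frame late
cost, path = dtw(delayed, reference)
aligned = warp_to_reference(delayed, reference)
```

In this example DTW matches the delayed sequence at zero cost, and warping recovers the reference exactly. Trimming the follow-through before alignment would simply mean slicing each shot's frames up to its center frame.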

If you rotate the previous graphic, you will find shot_151 to be a visible outlier.

Shot 151: A notable outlier.

Providing Visual and Natural Language Feedback to Users

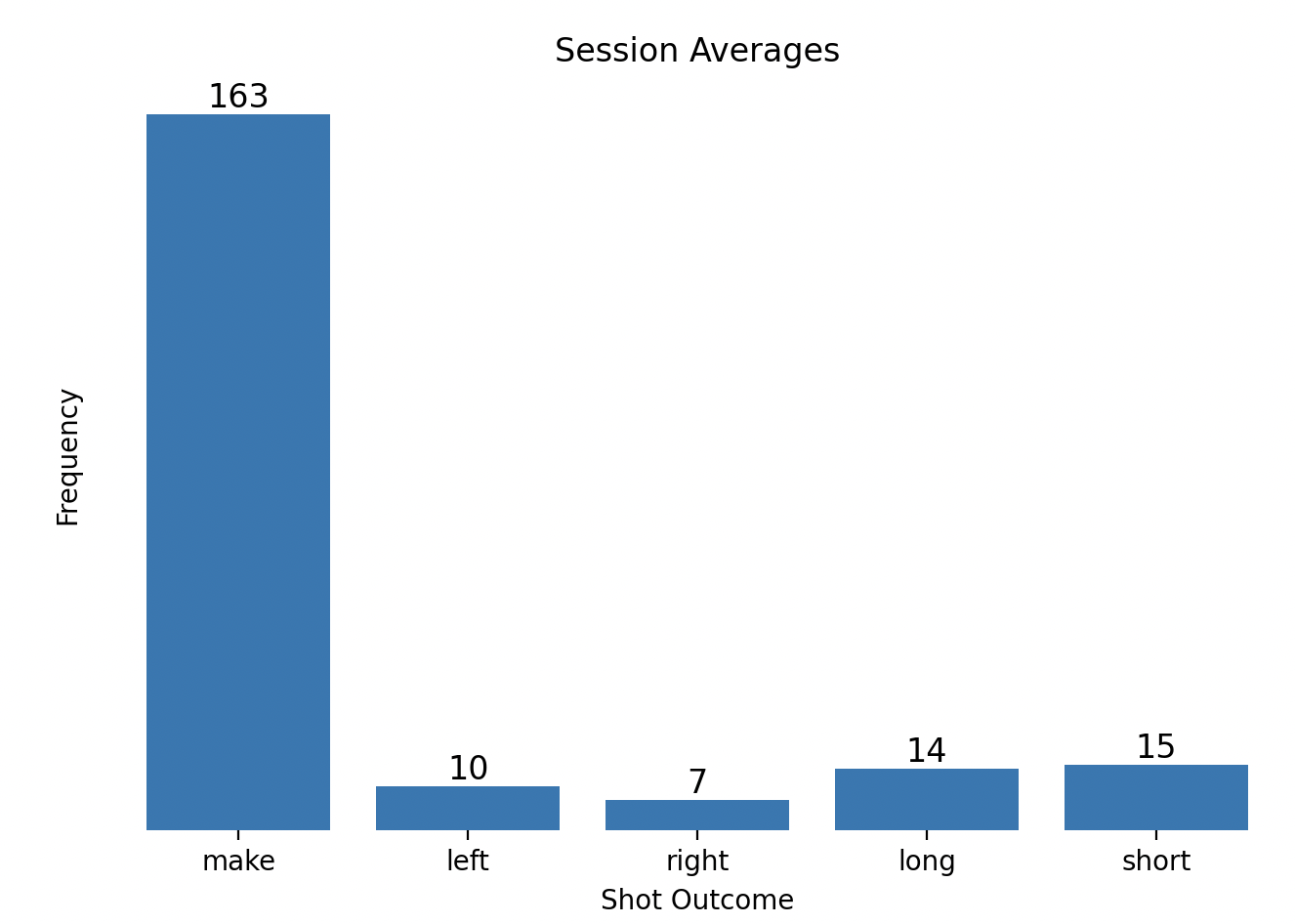

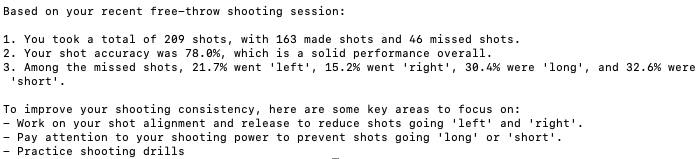

There is plenty of easy feedback to give users based on the audio data alone! As a proof of concept, this code calculates various statistics for a free throw shooting session, then visualizes them and uses OpenAI’s API to provide feedback in natural language.

The tool uses Matplotlib to visualize shot performance during each session and feeds summary statistics into a formatted prompt that is sent to an LLM through the OpenAI API.
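A sketch of the summary-statistics-to-prompt step, assuming the session is stored as the list of spoken labels. The prompt wording and dictionary keys are hypothetical. Sending the prompt is left out; with the official `openai` Python package (v1 and later), it would go in the `messages` argument of `client.chat.completions.create`.

```python
from collections import Counter

MISS_DIRECTIONS = ("left", "right", "short", "long")

PROMPT_TEMPLATE = """You are a basketball shooting coach reviewing a free throw session.
Attempts: {attempts}
Makes: {makes} ({make_pct}%)
Misses by direction: {misses_by_direction}
Most common miss: {most_common_miss}
Give two or three short, concrete suggestions for the player's next session."""


def session_summary(labels):
    """Summary statistics from the spoken labels of one session."""
    counts = Counter(labels)
    attempts = len(labels)
    makes = counts["make"]
    misses = {d: counts[d] for d in MISS_DIRECTIONS}
    return {
        "attempts": attempts,
        "makes": makes,
        "make_pct": round(100 * makes / attempts, 1) if attempts else 0.0,
        "misses_by_direction": misses,
        # Ties go to the direction listed first in MISS_DIRECTIONS.
        "most_common_miss": max(misses, key=misses.get) if any(misses.values()) else None,
    }


def build_prompt(labels):
    """Fill the prompt template with one session's statistics."""
    return PROMPT_TEMPLATE.format(**session_summary(labels))


labels = ["make", "long", "long", "make", "left", "make", "short", "make"]
summary = session_summary(labels)
prompt = build_prompt(labels)
```

Keeping the statistics in a plain dictionary means the same numbers can drive both the Matplotlib charts and the LLM prompt.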

This tool also visualizes a player’s “streakiness”. This may help show them how many warmup shots they need before getting into rhythm, and reveal fatigue if they start missing more over time.

This visualization shows shot performance over time throughout a shootaround session. It shows that I started the free throw session shooting the ball long and took about 40 shots before getting into a good rhythm. This could inform personalized warmup recommendations.
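Streakiness and a warmup estimate can be computed directly from the label sequence. In this minimal sketch, the trailing window size and the make-rate `target` that counts as being “in rhythm” are hypothetical parameters, not values from the original tool.

```python
import numpy as np


def rolling_make_rate(labels, window=10):
    """Fraction of makes over a trailing window of shots (shorter at the start)."""
    made = np.array([lab == "make" for lab in labels], dtype=float)
    csum = np.concatenate(([0.0], np.cumsum(made)))
    idx = np.arange(1, len(made) + 1)   # number of shots taken so far
    lo = np.maximum(0, idx - window)    # start of each trailing window
    return (csum[idx] - csum[lo]) / (idx - lo)


def warmup_shots(labels, window=10, target=0.7):
    """Shots taken before the first full window whose make rate reaches
    `target`; None if the session never gets there."""
    rate = rolling_make_rate(labels, window)
    for i in range(window - 1, len(rate)):
        if rate[i] >= target:
            return i + 1 - window
    return None


def longest_make_streak(labels):
    """Longest run of consecutive makes."""
    best = current = 0
    for lab in labels:
        current = current + 1 if lab == "make" else 0
        best = max(best, current)
    return best


labels = ["long"] * 6 + ["make"] * 8 + ["left"] + ["make"] * 3
rates = rolling_make_rate(labels, window=4)  # values to plot against shot number
```

Plotting `rates` against shot number gives the streakiness curve, and a late-session decline in the same curve is one simple fatigue signal.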

These visualizations are just a few of the many ways to present feedback to basketball players as they work on their form. I did train a simple RNN classifier to predict whether shots were “made” or “missed”, but it had poor accuracy due to the small amount of data, the consistency of my free throw form (I have practiced free throws more than a beginner might have), and the granularity of the captured data. Future work should include recruiting beginners who are still developing a jump shot, letting them see how variations in their shooting form relate to shooting accuracy.

All code will be pushed after we finish user testing in May, but if you would like a copy, please reach out to me and I will send you the code!